Graphics Tech in Cesium - The Graphics Stack

Cesium resembles a general graphics engine, but as we move up the layers of abstraction in Cesium, classes become more specific to Cesium’s problem domain: virtual globes. Here, we tour the full low-level Cesium graphics stack and make comparisons to graphics and game engines.

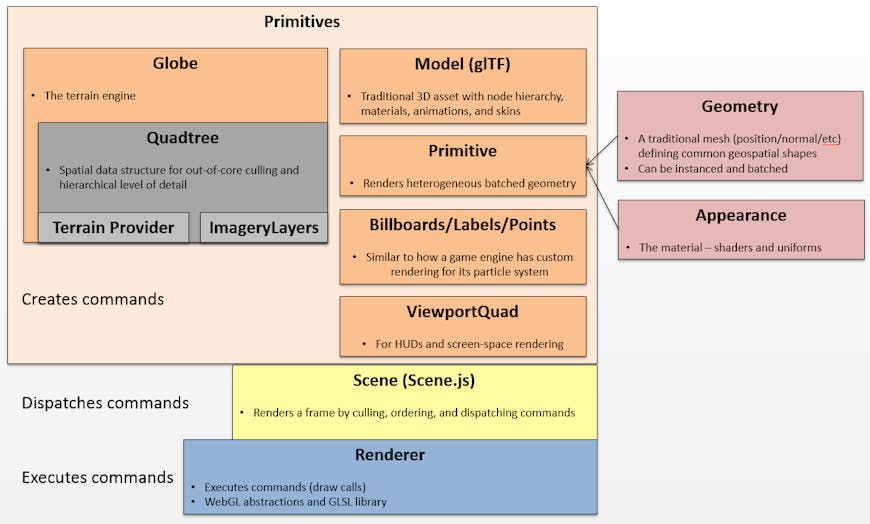

As described before, the lowest level of the stack is the Renderer, which is a WebGL abstraction layer that handles WebGL resource management and Draw Command execution. A command represents a WebGL draw call and all the state needed to execute it, e.g., shader, uniforms, vertex array, etc.
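
To make this concrete, a command can be pictured as a plain object that bundles everything one draw call needs. The sketch below is illustrative only: the field names, the `executeCommand` helper, and the recording stand-in for a WebGL context are assumptions made for this example, not Cesium's Renderer classes or the WebGL API.

```javascript
// Hypothetical sketch: a command bundles the state for one draw call.
// A tiny recorder stands in for a WebGL context so the example runs anywhere.
function createRecordingContext() {
  const calls = [];
  return {
    calls,
    useProgram: (program) => calls.push(["useProgram", program]),
    setUniform: (name, value) => calls.push(["setUniform", name, value]),
    bindVertexArray: (vertexArray) => calls.push(["bindVertexArray", vertexArray]),
    drawElements: (count) => calls.push(["drawElements", count]),
  };
}

function executeCommand(context, command) {
  context.useProgram(command.shaderProgram);
  for (const [name, getValue] of Object.entries(command.uniformMap)) {
    // Uniforms are stored as functions so they are evaluated at execute time.
    context.setUniform(name, getValue());
  }
  context.bindVertexArray(command.vertexArray);
  context.drawElements(command.count);
}

const exampleCommand = {
  shaderProgram: "globeProgram",
  vertexArray: "tileVertexArray",
  count: 36,
  uniformMap: { u_color: () => [1.0, 0.0, 0.0, 1.0] },
};

const recordingContext = createRecordingContext();
executeCommand(recordingContext, exampleCommand);
```

Because the command carries all of its own state, whoever executes it does not need to know which primitive created it.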

The next layer, built on the Renderer, is the Scene, which is responsible for rendering a frame by requesting commands from higher levels in Cesium, culling them, ordering them, and eventually dispatching them to the Renderer as described in the frame walkthrough.
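
The cull, order, and dispatch steps can be sketched roughly as follows. This is a simplified illustration under stated assumptions: the pass names, the plane representation, and `processCommands` are hypothetical, and a real frame involves considerably more work than this.

```javascript
// Hypothetical sketch of per-frame command processing: cull, order, dispatch.
const PASS_ORDER = { OPAQUE: 0, TRANSLUCENT: 1 }; // assumed pass names

function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// A sphere is culled if it lies entirely on the negative side of any plane.
function isVisible(sphere, planes) {
  return planes.every(
    (plane) => dot(plane.normal, sphere.center) + plane.distance >= -sphere.radius
  );
}

function processCommands(commandList, planes, execute) {
  const visible = commandList.filter((command) =>
    isVisible(command.boundingSphere, planes)
  );
  // Opaque commands run before translucent ones.
  visible.sort((a, b) => PASS_ORDER[a.pass] - PASS_ORDER[b.pass]);
  visible.forEach(execute);
  return visible;
}

// One plane facing +z: only spheres reaching z >= 0 survive culling.
const cullingPlanes = [{ normal: [0, 0, 1], distance: 0 }];
const frameCommands = [
  { name: "glass", pass: "TRANSLUCENT", boundingSphere: { center: [0, 0, 5], radius: 1 } },
  { name: "terrain", pass: "OPAQUE", boundingSphere: { center: [0, 0, 5], radius: 1 } },
  { name: "behind", pass: "OPAQUE", boundingSphere: { center: [0, 0, -10], radius: 1 } },
];
const executedNames = [];
processCommands(frameCommands, cullingPlanes, (command) => executedNames.push(command.name));
// executedNames is ["terrain", "glass"]
```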

The top layer in the graphics stack, built on the Renderer and the Scene, is the Primitives, which represent real-world objects that are rendered by creating commands and providing them to the Scene.

The Cesium Graphics Stack.

Note that in Cesium, both Scene and Primitives are in the Scene directory. When we say Scene here, we mean Scene.js. A Primitive is defined as anything with an update function that adds commands to the Scene’s CommandList.
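
Under that definition, a minimal primitive could look something like the sketch below. The class name, the `show` flag, and the shape of `frameState` are assumptions for illustration; the command itself is a placeholder object rather than a real draw command.

```javascript
// Hypothetical minimal primitive: any object with an update function
// that appends commands to the frame's command list.
class DebugBoxPrimitive {
  constructor(name) {
    this.show = true;
    // Created once and reused every frame.
    this._command = { owner: this, description: `draw ${name}` };
  }

  update(frameState) {
    if (!this.show) {
      return; // hidden primitives contribute no commands
    }
    frameState.commandList.push(this._command);
  }
}

const frameState = { commandList: [] };
const boxes = [new DebugBoxPrimitive("a"), new DebugBoxPrimitive("b")];
boxes[1].show = false;
boxes.forEach((box) => box.update(frameState));
// frameState.commandList now holds only the command for box "a"
```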

Globe

The Globe primitive renders the globe: terrain, imagery layers, and animated water. In a game engine, this would be the environment or level. A big difference in Cesium is that the environment’s size is effectively unbounded and navigation is unconstrained. Most game engines, by contrast, pack each level into a format optimized for their runtime and load the entire thing into memory.

Cesium uses a quadtree, in geographic coordinates, for out-of-core hierarchical level of detail (HLOD) as described in [Ring13]. The QuadtreePrimitive is not yet part of the Cesium API, so beware of breaking changes if you decide to use it anyway. Terrain tiles contain geometry. A set of them approximates the globe’s surface for a given view.
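
As a rough illustration of a quadtree in geographic coordinates, the sketch below splits a longitude/latitude rectangle into four children and refines only toward a point of interest. The tile shape and the `childrenOf`, `containsPoint`, and `selectTiles` helpers are hypothetical, and the point-containment test is a deliberate simplification standing in for a real view-dependent refinement criterion.

```javascript
// Hypothetical sketch: geographic quadtree tiles, in degrees.
function childrenOf(tile) {
  const { west, south, east, north, level } = tile;
  const midLon = (west + east) / 2;
  const midLat = (south + north) / 2;
  const next = level + 1;
  return [
    { west, south: midLat, east: midLon, north, level: next }, // northwest
    { west: midLon, south: midLat, east, north, level: next }, // northeast
    { west, south, east: midLon, north: midLat, level: next }, // southwest
    { west: midLon, south, east, north: midLat, level: next }, // southeast
  ];
}

function containsPoint(tile, lon, lat) {
  return lon >= tile.west && lon < tile.east && lat >= tile.south && lat < tile.north;
}

// Refine only the tiles that contain the point of interest, up to maxLevel.
// Tiles away from the point stay coarse, so the result still covers the root.
function selectTiles(tile, lon, lat, maxLevel, selected = []) {
  if (tile.level >= maxLevel || !containsPoint(tile, lon, lat)) {
    selected.push(tile);
    return selected;
  }
  for (const child of childrenOf(tile)) {
    selectTiles(child, lon, lat, maxLevel, selected);
  }
  return selected;
}

// A western-hemisphere root tile, refined toward Crater Lake.
const westernRoot = { west: -180, south: -90, east: 0, north: 90, level: 0 };
const selectedTiles = selectTiles(westernRoot, -122.1, 42.9, 3);
// selectedTiles.length is 10: three level-1, three level-2, and four level-3 tiles
```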

At runtime, imagery tiles from one or more imagery layers are mapped to each terrain tile. This enables runtime flexibility and support for open standards, at the cost of fairly complex code and some overhead, such as reprojecting non-native imagery projections. This is quite different from a game engine, which would generally combine geometry (terrain) and texture (imagery) into a custom format optimized for runtime loading and rendering, but without as much runtime flexibility.

When the Scene calls the Globe’s update function, the quadtree is traversed and commands are returned for terrain tiles. Each command references textures for each overlapping imagery tile and uses a procedurally generated shader based on the number of overlapping imagery tiles and other conditions, such as whether lighting, post-processing filters, or animated water are enabled. The shader is generated using an uber shader and string concatenation.
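
One way to picture this kind of shader generation is sketched below: a fixed uber shader string is prefixed with `#define` lines and followed by a function whose body is unrolled once per imagery texture. All GLSL names here (`u_layerTextures`, `blendLayer`, `ENABLE_LIGHTING`, and so on) are invented for the example and are not Cesium's shader source.

```javascript
// Hypothetical uber shader fragment; names are illustrative, not Cesium's.
const UBER_SHADER_SOURCE = [
  "uniform sampler2D u_layerTextures[TEXTURE_UNITS];",
  "vec4 blendLayer(vec4 base, vec4 layer) {",
  "  return vec4(mix(base.rgb, layer.rgb, layer.a), 1.0);",
  "}",
  "#ifdef ENABLE_LIGHTING",
  "uniform vec3 u_lightDirection;",
  "#endif",
  "",
].join("\n");

function buildGlobeFragmentShader({ textureCount, enableLighting }) {
  const defines = [`#define TEXTURE_UNITS ${textureCount}`];
  if (enableLighting) {
    defines.push("#define ENABLE_LIGHTING");
  }
  // Unroll the layer loop so every sampler index is a literal constant.
  let body = "vec4 computeColor(vec2 st) {\n";
  body += "  vec4 color = vec4(0.0, 0.0, 0.0, 1.0);\n";
  for (let i = 0; i < textureCount; ++i) {
    body += `  color = blendLayer(color, texture2D(u_layerTextures[${i}], st));\n`;
  }
  body += "  return color;\n}\n";
  return `${defines.join("\n")}\n${UBER_SHADER_SOURCE}${body}`;
}

const threeLayerShader = buildGlobeFragmentShader({
  textureCount: 3,
  enableLighting: false,
});
```

Tiles with the same texture count and options produce identical source, so in practice the generated programs could be cached by those parameters.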

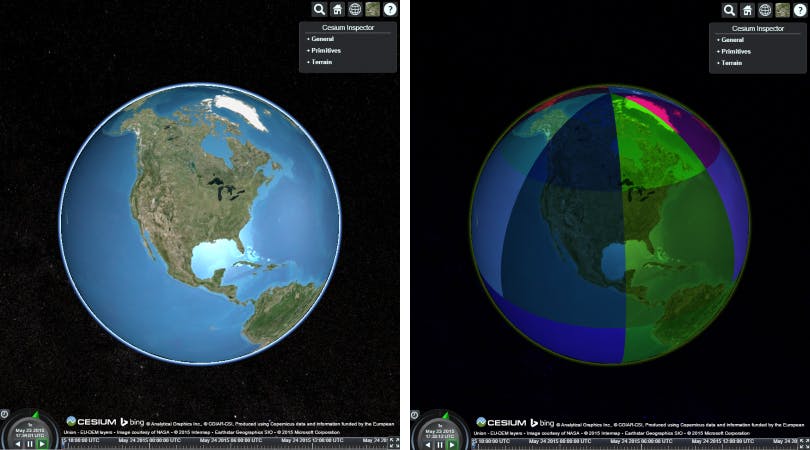

Left: The default view in Cesium using 12 commands to render the globe. Right: Visualization of each command using viewer.scene.debugShowCommands = true.

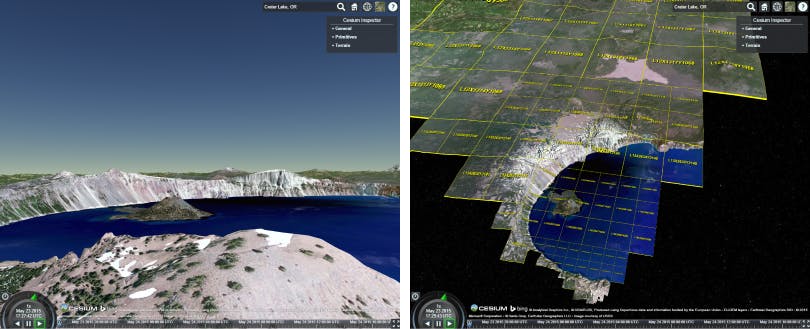

Left: Zoomed in view of Crater Lake looking towards the horizon using 186 commands. Right: The same commands with their tile coordinates from a different perspective. Horizon views are challenging to optimize and are one reason why 3D is much harder than 2D.

Model

The next most complicated primitive is the Model, which represents a traditional 3D model like an artist would create in Blender, Maya, etc. In a game engine, this is generally called an asset or, well, a model, and is exported to a custom format optimized for runtime loading and rendering.

Cesium uses glTF, which is an initiative from Khronos for an open-standard runtime format for WebGL. Luckily, I learned about it early and became involved in the spec instead of rolling our own custom format.

When the Scene calls a Model’s update function, the model traverses its node hierarchy, if needed, and applies any new transforms, animations, or skins. There are several optimizations to make this as fast as possible, such as a fast slerp and animating only targeted subtrees.
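
For readers unfamiliar with slerp, here is the textbook formulation for interpolating between two unit quaternions, which is what rotation animations evaluate per keyframe pair. This is the standard version, not the optimized fast slerp mentioned above; the `[x, y, z, w]` array layout and the 0.9995 threshold are choices made for this sketch.

```javascript
// Textbook spherical linear interpolation between unit quaternions [x, y, z, w].
function slerp(q0, q1, t) {
  let cosTheta = q0[0] * q1[0] + q0[1] * q1[1] + q0[2] * q1[2] + q0[3] * q1[3];
  let end = q1;
  if (cosTheta < 0) {
    // q and -q are the same rotation; flip to take the shorter arc.
    cosTheta = -cosTheta;
    end = q1.map((v) => -v);
  }
  if (cosTheta > 0.9995) {
    // Nearly parallel: fall back to normalized linear interpolation.
    const lerped = q0.map((v, i) => v + t * (end[i] - v));
    const length = Math.hypot(...lerped);
    return lerped.map((v) => v / length);
  }
  const theta = Math.acos(cosTheta);
  const sinTheta = Math.sin(theta);
  const w0 = Math.sin((1 - t) * theta) / sinTheta;
  const w1 = Math.sin(t * theta) / sinTheta;
  return q0.map((v, i) => w0 * v + w1 * end[i]);
}

// Halfway between identity and a 90 degree rotation about z is 45 degrees about z.
const identityRotation = [0, 0, 0, 1];
const quarterTurnZ = [0, 0, Math.sin(Math.PI / 4), Math.cos(Math.PI / 4)];
const halfwayRotation = slerp(identityRotation, quarterTurnZ, 0.5);
```

The trigonometric calls are what make slerp comparatively expensive when many nodes are animated every frame, which is the motivation for a faster approximation.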

Shaders are loaded directly from the glTF file and a separate patched shader is used for picking. glTF semantics such as MODELVIEW are mapped to the uniform state used to set Cesium automatic uniforms such as czm_modelView.

A model may return one command or several hundred depending on how it was authored, how many materials it has, and how many nodes are targeted for animation. gltf-statistics is a utility to display these statistics.

Left: The Cesium milk truck model from the Google Earth Monster Milktruck port. Right: The milk truck is rendered with five commands (three visible from this view, note the different colored wheels).

Primitive

Cesium’s generic primitive, plainly named Primitive, is an area where Cesium is very different from a game engine. Game engines usually include only a minimal set of primitives, such as polylines, spheres, and polygons, that are useful for corner cases such as debugging and heads-up displays (HUDs). Instead, they focus on the environment, assets, and effects such as lighting, particle systems, and post-processing.

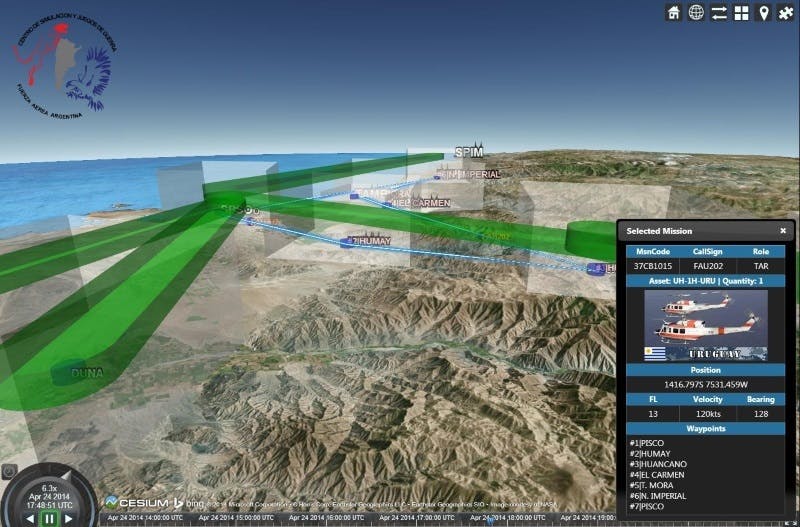

Cesium, on the other hand, includes a large library of geometries for common objects drawn on a globe such as polylines, polygons, and extruded volumes. Most Cesium apps, like the Air Tasking Orders Visualizer shown below, draw the majority of their content using geometries. This is quite different than a game, where most of the content is 3D model assets.

ATO Visualizer developed by the Wargaming and Simulation Center of the Argentinian Air Force.

Cesium is also unique in that its geometry tessellation algorithms are designed to conform to the surface of an ellipsoid such as Earth’s WGS84 ellipsoid, as opposed to a flat Cartesian world. For example, polygon triangulation includes an additional subdivision stage to approximate the curvature of the ellipsoid.
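
The idea behind such a subdivision stage can be sketched as follows: split a flat triangle until its edges are short enough, then push every vertex out to the ellipsoid surface. This is a simplified illustration, not Cesium's tessellation code. It uses a uniform four-way split and scales along the ray from the ellipsoid's center; `subdivide` and the `maxEdgeLength` parameter are hypothetical, and a production version would also need to keep shared edges between neighboring triangles consistent.

```javascript
// WGS84 semi-major and semi-minor axes in meters.
const WGS84 = { a: 6378137.0, b: 6356752.314245179 };

// Scale a point along the ray from the ellipsoid center onto the surface.
function scaleToGeocentricSurface(p) {
  const { a, b } = WGS84;
  const k = 1 / Math.sqrt((p[0] * p[0] + p[1] * p[1]) / (a * a) + (p[2] * p[2]) / (b * b));
  return [p[0] * k, p[1] * k, p[2] * k];
}

function midpoint(p, q) {
  return [(p[0] + q[0]) / 2, (p[1] + q[1]) / 2, (p[2] + q[2]) / 2];
}

function distance(p, q) {
  return Math.hypot(p[0] - q[0], p[1] - q[1], p[2] - q[2]);
}

// Split until every edge is at most maxEdgeLength, then project to the surface.
function subdivide(triangle, maxEdgeLength, out = []) {
  const [p0, p1, p2] = triangle;
  const longest = Math.max(distance(p0, p1), distance(p1, p2), distance(p2, p0));
  if (longest <= maxEdgeLength) {
    out.push(triangle.map(scaleToGeocentricSurface));
    return out;
  }
  const m01 = midpoint(p0, p1);
  const m12 = midpoint(p1, p2);
  const m20 = midpoint(p2, p0);
  subdivide([p0, m01, m20], maxEdgeLength, out);
  subdivide([m01, p1, m12], maxEdgeLength, out);
  subdivide([m20, m12, p2], maxEdgeLength, out);
  subdivide([m01, m12, m20], maxEdgeLength, out);
  return out;
}

// One octant of the globe: two equatorial points and the north pole.
const octant = [[WGS84.a, 0, 0], [0, WGS84.a, 0], [0, 0, WGS84.b]];
const curvedTriangles = subdivide(octant, 3.0e6);
// curvedTriangles.length is 16 (two levels of four-way splitting)
```

A smaller `maxEdgeLength` gives a closer approximation of the curvature at the cost of more triangles.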

The tessellation pipeline also occurs at runtime using web workers, which enables support for open geospatial standards and file formats with concise geometry parameters instead of pre-tessellated geometry.

To minimize the number of commands executed, Cesium batches geometries into a single vertex buffer as long as they share the same vertex format and the same appearance, which defines the vertex and fragment shaders. For example, position/normal geometry cannot be batched with position/normal/texture-coordinate geometry.
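
The batching rule can be illustrated with a small grouping function. The instance shape, `appearanceId`, and `batchGeometries` below are hypothetical names for this sketch; real batching also has to merge the vertex data itself.

```javascript
// Hypothetical sketch: group geometry instances that can share one vertex buffer.
function batchKey(instance) {
  // Vertex format: the sorted set of attribute names.
  const format = Object.keys(instance.attributes).sort().join("/");
  return `${format}|${instance.appearanceId}`;
}

function batchGeometries(instances) {
  const batches = new Map();
  for (const instance of instances) {
    const key = batchKey(instance);
    if (!batches.has(key)) {
      batches.set(key, { key, vertexCount: 0, instances: [] });
    }
    const batch = batches.get(key);
    batch.instances.push(instance);
    batch.vertexCount += instance.vertexCount;
  }
  return [...batches.values()];
}

const geometryInstances = [
  { id: "polygon1", appearanceId: "flatColor", vertexCount: 12, attributes: { position: [], normal: [] } },
  { id: "polygon2", appearanceId: "flatColor", vertexCount: 30, attributes: { normal: [], position: [] } },
  { id: "wall1", appearanceId: "flatColor", vertexCount: 8, attributes: { position: [], normal: [], st: [] } },
  { id: "polygon3", appearanceId: "striped", vertexCount: 6, attributes: { position: [], normal: [] } },
];
const geometryBatches = batchGeometries(geometryInstances);
// Three batches: polygon1 + polygon2 share one; wall1 and polygon3 each get their own.
```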

The final shader is procedurally generated by modifying the appearance’s vertex and fragment shaders to include high-precision rendering via Relative to Eye (GPU RTE) [Ohlarik08], 3D/2D/Columbus-View support, per-geometry show/hide, and unit vector compression [Bagnell15]. The runtime geometry pipeline, which batches geometries, makes the corresponding changes to the vertex attributes, e.g., separating the low/high position bits for GPU RTE, normal compression, and so on.
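
To show why splitting positions into high and low parts helps, the sketch below encodes a double as two 32-bit floats and compares an eye-relative subtraction done both ways. The split at multiples of 65536 is one common scheme and is used here as an assumption; `Math.fround` simulates 32-bit storage, and the function names are made up for the example.

```javascript
// Split a double into two values that each survive storage as a 32-bit float.
function encodeComponent(value) {
  const sign = value >= 0 ? 1 : -1;
  const doubleHigh = Math.floor(Math.abs(value) / 65536.0) * 65536.0;
  return {
    high: Math.fround(sign * doubleHigh),
    low: Math.fround(value - sign * doubleHigh),
  };
}

// What a vertex shader would do: subtract high and low parts separately.
function relativeToEye(position, eye) {
  const p = encodeComponent(position);
  const e = encodeComponent(eye);
  const highDifference = Math.fround(p.high - e.high);
  const lowDifference = Math.fround(p.low - e.low);
  return Math.fround(highDifference + lowDifference);
}

// The naive approach: round each value to 32 bits first, then subtract.
function naiveRelativeToEye(position, eye) {
  return Math.fround(Math.fround(position) - Math.fround(eye));
}

// A point on the equator with the eye about a meter away, in meters.
const positionX = 6378137.123;
const eyeX = 6378136.0;
const exactDifference = positionX - eyeX;
const rteError = Math.abs(relativeToEye(positionX, eyeX) - exactDifference);
const naiveError = Math.abs(naiveRelativeToEye(positionX, eyeX) - exactDifference);
// rteError is well under a millimeter; naiveError is over ten centimeters.
```

At this magnitude a 32-bit float can only represent steps of half a meter, which is the source of the jitter that GPU RTE avoids.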

Billboards/Labels/Points

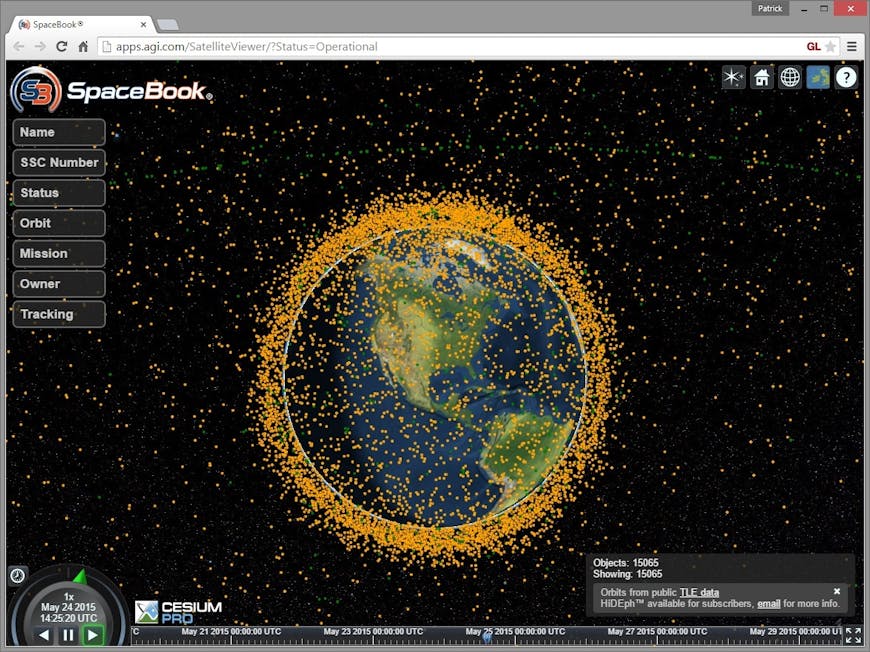

In a game engine, billboards are most often used for particle system effects such as smoke and fire. Cesium’s billboards are more general purpose, and often represent moving objects like the satellites in SpaceBook and the paragliders in Doarama as shown below.

SpaceBook developed by AGI showing 15K satellites.

Cesium’s billboards provide a lot of flexibility, such as the ability to show/hide, set pixel and eye offsets, set screen-space rotation, and scale their size based on distance. This can lead to “fat vertices,” so Cesium uses packing and compression to minimize the number of vertex attributes [Bagnell15].

An uber shader uses the GLSL preprocessor to turn off unnecessary features so, for example, if no billboards are rotated, the vertex shader does not do any math for the rotation. The billboard’s vertex shader is fairly involved, but the fragment shader is trivial. This is common throughout Cesium, and the opposite of most games, which have complex fragment shaders and simple vertex shaders.

Billboards may be completely static, completely dynamic, or somewhere in between where, for example, the position property for a subset of billboards changes every frame, but the other properties do not. To handle this efficiently, billboards dynamically group vertex attributes into separate vertex buffers based on their usage so, for example, if only the position changes, the rest of the vertex attributes are not written.
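
A rough sketch of grouping attributes by usage is shown below. The usage labels, attribute names, and the write counter are assumptions for illustration; the point is only that a change to one attribute touches one buffer instead of all of them.

```javascript
// Hypothetical sketch: one vertex buffer per usage pattern.
function groupAttributesByUsage(attributes) {
  const buffers = new Map();
  for (const attribute of attributes) {
    if (!buffers.has(attribute.usage)) {
      buffers.set(attribute.usage, { usage: attribute.usage, attributeNames: [], writes: 0 });
    }
    buffers.get(attribute.usage).attributeNames.push(attribute.name);
  }
  return buffers;
}

// Rewrite only the buffers that contain at least one changed attribute.
function writeDirtyBuffers(buffers, dirtyAttributeNames) {
  const dirty = new Set(dirtyAttributeNames);
  let buffersWritten = 0;
  for (const buffer of buffers.values()) {
    if (buffer.attributeNames.some((name) => dirty.has(name))) {
      buffer.writes += 1; // stands in for uploading new vertex data
      buffersWritten += 1;
    }
  }
  return buffersWritten;
}

const billboardBuffers = groupAttributesByUsage([
  { name: "position", usage: "STREAM" }, // changes every frame
  { name: "color", usage: "STATIC" },
  { name: "pixelOffset", usage: "STATIC" },
]);
const buffersWrittenThisFrame = writeDirtyBuffers(billboardBuffers, ["position"]);
// buffersWrittenThisFrame is 1; the static buffer is untouched
```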

To reduce the number of commands created, billboards are batched into a collection using a texture atlas that is created and modified at runtime. This is quite different than a game engine, which creates static texture atlases offline.

Text labels are implemented using billboards, where each character is one billboard. This has some per-character memory overhead, but does not increase the number of commands generated since the same texture-atlas-enabled batching is used as shown below.
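
The per-character approach can be sketched like this. The fixed glyph width, the `Map` standing in for a texture atlas, and `createLabelBillboards` are simplifications invented for the example; real text layout uses per-glyph metrics.

```javascript
// Hypothetical sketch: one billboard per character, sharing atlas entries.
function createLabelBillboards(text, atlas, glyphWidth) {
  const billboards = [];
  let penX = 0;
  for (const character of text) {
    if (!atlas.has(character)) {
      // First use of this glyph: add it to the atlas at runtime.
      atlas.set(character, atlas.size);
    }
    billboards.push({
      character,
      atlasIndex: atlas.get(character),
      pixelOffsetX: penX,
    });
    penX += glyphWidth; // fixed-width layout, an assumption for simplicity
  }
  return billboards;
}

const glyphAtlas = new Map();
const labelBillboards = createLabelBillboards("SATELLITE", glyphAtlas, 10);
// Nine billboards, but only six atlas entries: S, A, T, E, L, I
```

Repeated characters, within one label or across labels sharing the atlas, reuse the same atlas entry, so the per-character cost is vertex memory rather than texture memory or extra commands.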

Ayvri (renamed from Doarama) developed by NICTA.

Example texture atlas for labels.

Billboards are also used to draw points like the satellites above; however, starting in Cesium 1.10, the new point primitive is more efficient. It’s not as general-purpose as billboards, so it has lower overhead: for example, it needs no index data and no texture atlas.

Everything Else

In addition to the primitives described above, there are several others:

- ViewportQuad - for 2D overlays.

- SkyBox, SkyAtmosphere, Sun, and Moon - for the stars and sky.

- Polyline - the original Cesium polyline before geometries were introduced. Still used for dynamic polylines. Polylines are surprisingly non-trivial to implement; see [Bagnell13].

References

[Bagnell13] Dan Bagnell. Robust Polyline Rendering with WebGL. 2013.

[Bagnell15] Dan Bagnell. Graphics Tech in Cesium - Vertex Compression. 2015.

[Ohlarik08] Deron Ohlarik. Precisions, Precisions. 2008.

[Ring13] Kevin Ring. Rendering the Whole Wide World on the World Wide Web. 2013.