Testing Improvements in CesiumJS

Recently, we have made some improvements to the testing setup in CesiumJS as part of a larger effort to modernize the CesiumJS development environment. This investment in the CesiumJS infrastructure is designed to align with the latest standards of web development and to simplify the process of contributing. In this post we’ll share the testing process for CesiumJS so you can more easily write and maintain unit tests as you contribute.

Testing is central to CesiumJS. Because CesiumJS is used in such a diverse array of environments, comprehensive testing is the only way to ensure that the thousands of apps built on CesiumJS continue to run smoothly. Thanks to the testing practices of the Cesium community, we are able to maintain a massive code base that is updated monthly and has over 250 contributors.

More than half of the code in CesiumJS is unit tests (218,228 lines of JavaScript in the Source folder vs. 263,264 lines in Specs). These unit tests help validate both existing code and future changes. By frequently testing code locally and automating test runs in a CI pipeline, we can be reasonably confident that new changes do not introduce bugs or regressions. This lets us merge pull request branches into main quickly and confidently, and ship more fixes and features in each monthly release.

Because testing is so important to developer workflow, our testing code is held to the same standards as the engine code: it should be well-organized, cohesive, loosely coupled, fast, and peer-reviewed. With testing at the forefront of our minds, we specifically design engine code to be easily testable.

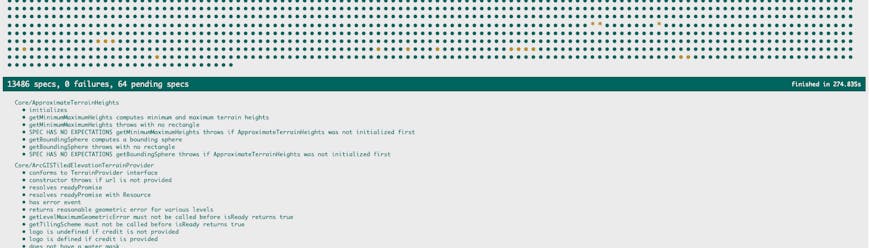

At Cesium, we use Jasmine, a behavior-driven testing framework, which calls a test a “spec” and a group of related “specs” a “suite.” One benefit of well-organized specs and suites is that their names often self-document the code by describing the expected behaviors.

Test suites are defined with a `describe` block, and each suite contains one or more unit tests defined with `it` blocks:

```js
describe("Core/Cartesian3", function () {
  it("construct with default values", function () {
    const cartesian = new Cartesian3();
    expect(cartesian.x).toEqual(0.0);
    expect(cartesian.y).toEqual(0.0);
    expect(cartesian.z).toEqual(0.0);
  });
});
```

The above code defines a test suite for Core/Cartesian3 containing a single unit test that validates the behavior of the default Cartesian3 constructor. In CesiumJS, the Specs/ directory mirrors the structure of the Source/ directory, and describe blocks are typically named after the path of the associated file in Source/.
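The naming convention can be captured in a small function. This is a minimal sketch for illustration only: the helper name `specInfoForSource` is hypothetical, and the `Spec.js` file suffix (as in `Cartesian3Spec.js`) is an assumption rather than something stated above.

```js
// Hypothetical helper: derive the spec file path and describe-block name
// for a file under Source/, following the mirrored-directory convention.
// Assumes spec files are named <Module>Spec.js.
function specInfoForSource(sourcePath) {
  const prefix = "Source/";
  if (!sourcePath.startsWith(prefix) || !sourcePath.endsWith(".js")) {
    throw new Error(`Not a JavaScript file under Source/: ${sourcePath}`);
  }
  // "Source/Core/Cartesian3.js" -> "Core/Cartesian3"
  const suiteName = sourcePath.slice(prefix.length, -".js".length);
  return {
    specPath: `Specs/${suiteName}Spec.js`,
    suiteName,
  };
}

console.log(specInfoForSource("Source/Core/Cartesian3.js"));
```

For `Source/Core/Cartesian3.js`, the sketch yields the suite name `Core/Cartesian3` (matching the describe block above) and the spec path `Specs/Core/Cartesian3Spec.js`.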

We recommend a few best practices for writing tests:

- Unit tests should test isolated “units” of expected behavior. Each test should rely on as few moving pieces as possible in order to pass.

- Tests should have as close to 100% code coverage as possible. This means every functional line of code should be reached at least once by a corresponding test.

- Tests should run quickly and efficiently. Reducing friction when running tests encourages us to test frequently.

- Unit tests should work on all supported browsers and devices. In some cases, this means tests should return early when they rely on features that not all browsers support (e.g., WebGL2).

- Running tests should produce the same outcome regardless of the order in which they execute. By default, Jasmine runs tests in a random order each time. This is an area we're looking to improve in CesiumJS: because of some finicky tests, it's not always possible to randomize the order at the moment.
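To see why shared state makes results depend on execution order, here is a minimal sketch in plain JavaScript (no Jasmine). The two "specs" are hypothetical functions that share a module-level counter; the second one passes only if it happens to run first, which is exactly the kind of hidden coupling that random ordering tends to expose.

```js
// Shared mutable state: the source of the order dependence.
let counter = 0;

// Hypothetical spec that mutates shared state and never resets it.
function specIncrements() {
  counter += 1;
  return counter === 1;
}

// Hypothetical spec that silently assumes a fresh counter.
function specStartsAtZero() {
  return counter === 0;
}

// Run specs in the given order. State is reset once at the start of the
// run, but not between specs, so earlier specs can affect later ones.
function runInOrder(specs) {
  counter = 0;
  return specs.map((spec) => ({ name: spec.name, passed: spec() }));
}

console.log(runInOrder([specStartsAtZero, specIncrements])); // both pass
console.log(runInOrder([specIncrements, specStartsAtZero])); // second fails
```

The usual fix is to reset shared state before every spec (Jasmine provides `beforeEach` for this) so that each spec passes or fails on its own.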

Together, these practices streamline not only our development but also our continuous integration (CI) pipeline and release process. Every time a pull request is opened in the CesiumJS repo, we run an automated series of commands to make sure that CesiumJS still builds and passes its tests with the new code changes. In addition, we run the tests on different operating systems at least once before every monthly release. Because our tests run quickly, with well-defined behavior, on multiple machines with varying feature availability, we can rely on them to report accurately whether a new feature will “pass” or “fail” in all environments when built and tested.

Running unit tests is a big part of the developer workflow. When changes or new features are added, unit tests should be written early and run frequently. There are two main options for executing unit tests in CesiumJS:

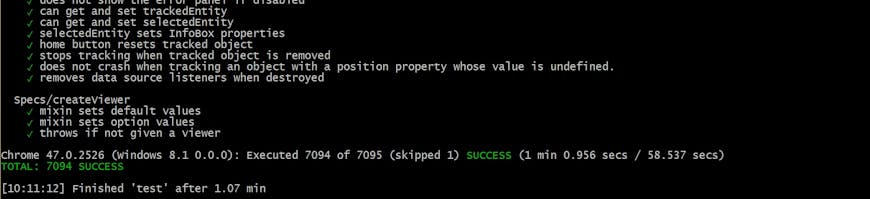

- On the command line using Karma, a tool that executes CesiumJS test code in a browser and displays the results in the command line. The command for running tests with Karma is `npm run test`.

- In a browser from a local server using Jasmine. The browser Spec Runner can be accessed at http://localhost:8080/Specs/SpecRunner.html.
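Karma reads its settings from a configuration file. The sketch below is a minimal, hypothetical `karma.conf.js`, not CesiumJS's actual configuration: it assumes the `karma-jasmine` and `karma-chrome-launcher` plugins are installed, and the `files` glob is a placeholder. It also shows how karma-jasmine forwards options such as `random` to Jasmine, which is one way to turn off randomized spec ordering.

```js
// Hypothetical karma.conf.js sketch (CommonJS, as Karma expects).
// Assumes karma-jasmine and karma-chrome-launcher are installed.
function configureKarma(config) {
  config.set({
    // Provide Jasmine's describe/it/expect in the browser.
    frameworks: ["jasmine"],
    // Placeholder glob; a real project also needs its source files
    // and any module or bundling settings.
    files: ["Specs/**/*Spec.js"],
    // Launch Chrome to execute the tests.
    browsers: ["Chrome"],
    // Exit after one run instead of watching for changes.
    singleRun: true,
    // Options forwarded by karma-jasmine to Jasmine itself.
    client: {
      jasmine: {
        random: false, // run specs in declaration order
      },
    },
  });
}

module.exports = configureKarma;
```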

Sometimes it’s useful to manually run only a selection of specs or test suites. This can be done with Jasmine’s “focus” syntax: replace `it` with `fit` to focus a single spec, or replace a suite's `describe` with `fdescribe` to focus the whole suite:

```js
fdescribe("Core/Cartesian3", function () {
  fit("construct with default values", function () {
    const cartesian = new Cartesian3();
    expect(cartesian.x).toEqual(0.0);
    expect(cartesian.y).toEqual(0.0);
    expect(cartesian.z).toEqual(0.0);
  });
});
```

Similarly, specs and suites can be excluded with the `xit` and `xdescribe` syntax. In some rare cases, we find bugs in our tests that don’t have obvious fixes and that cause CI runs to fail on all pull requests. To avoid “false negative” build failures, we exclude these tests from running until the bugs are fixed.
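The interaction between focused and excluded specs can be summarized in a few lines. This is a simplified model for illustration, not Jasmine's implementation: the spec objects, their names (other than the one from the example above), and the `selectSpecs` helper are all hypothetical.

```js
// Each hypothetical spec records whether it (or an enclosing suite) was
// marked with fit/fdescribe ("focused") or xit/xdescribe ("excluded").
const specs = [
  { name: "construct with default values", focused: true, excluded: false },
  { name: "clone", focused: false, excluded: false },
  { name: "flaky normalize check", focused: false, excluded: true },
];

// Simplified selection rule: excluded specs never run; if anything is
// focused, only focused specs run; otherwise all remaining specs run.
function selectSpecs(allSpecs) {
  const candidates = allSpecs.filter((spec) => !spec.excluded);
  const focused = candidates.filter((spec) => spec.focused);
  return (focused.length > 0 ? focused : candidates).map((spec) => spec.name);
}

console.log(selectSpecs(specs));
```

Under this model, only "construct with default values" runs while the focus marker is present; once it is removed, "clone" runs too, and the excluded spec stays skipped. It also shows why a stray `fit` left in a branch would cause every other spec to be skipped.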

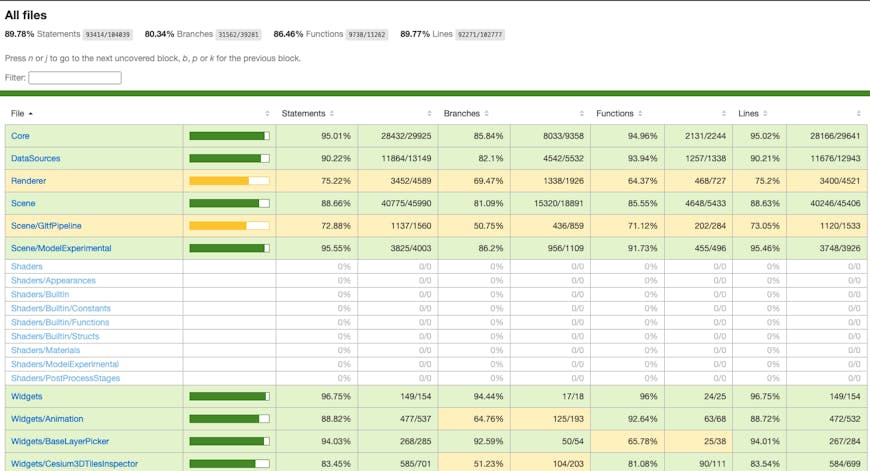

Code coverage is a metric expressing the percentage of lines of source code executed during unit tests. A higher percentage generally indicates more thoroughly tested, more reliable code. CesiumJS is currently at about 90% code coverage, and we strive for 100%.
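Coverage tools typically work by instrumenting the code under test with counters and reporting which counters were hit. The sketch below does this by hand for a hypothetical `clampToZero` function; it only illustrates the idea and is not how Karma's coverage reporter is implemented.

```js
// Hand-instrumented sketch: each executable line gets a counter,
// similar in spirit to what coverage tools insert automatically.
const lineHits = { entry: 0, negativeBranch: 0, nonNegativeBranch: 0 };

// Hypothetical function under test.
function clampToZero(x) {
  lineHits.entry += 1;
  if (x < 0) {
    lineHits.negativeBranch += 1;
    return 0;
  }
  lineHits.nonNegativeBranch += 1;
  return x;
}

// Percentage of instrumented lines executed at least once.
function coveragePercent(hits) {
  const counts = Object.values(hits);
  const covered = counts.filter((count) => count > 0).length;
  return (100 * covered) / counts.length;
}

clampToZero(5); // a single "test" with a positive input
console.log(coveragePercent(lineHits).toFixed(1)); // 66.7
clampToZero(-2); // a negative input reaches the remaining branch
console.log(coveragePercent(lineHits)); // 100
```

A single test with a positive input leaves the negative branch unexecuted, which is why reaching 100% usually requires one test per branch.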

Using Karma’s coverage reporter, we can generate an interactive summary of code coverage across all unit tests.

CesiumJS code coverage across all JS source code is 90%.

To generate this coverage summary, run `npm run coverage` on the command line. This places a report inside the `Build/Coverage/<browser>` folder and opens your default browser with the result. The report can also be viewed by navigating to http://localhost:8080/Build/Coverage/index.html in your browser.

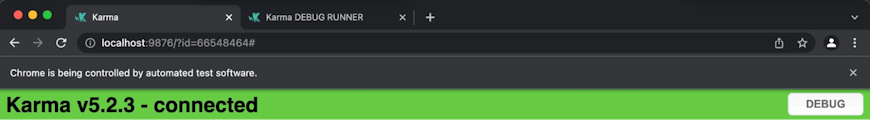

When trying to fix unexpected behavior, it’s often helpful to step through a test’s code (and the CesiumJS functions it calls) in a debugger. Both the command line and browser options for running specs can be connected to a browser debugger:

- On the command line, the `--debug` flag can be added (e.g., `npm run test -- --debug`) to prevent Karma from closing its browser window as soon as the tests complete. From Karma’s browser window, the Debug button opens a new tab where the source code for tests and the CesiumJS engine is accessible through the browser debugger.

- From the Spec Runner webpage, the source code for tests and the CesiumJS engine are accessible through the browser debugger.
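Besides setting breakpoints in the browser's developer tools, a `debugger;` statement can be placed temporarily in a spec or in engine code to pause execution at that exact point. A minimal sketch, where `midpoint` is a hypothetical function standing in for the code being investigated; per the JavaScript specification, the statement has no observable effect when no debugger is attached.

```js
// Hypothetical function being investigated.
function midpoint(a, b) {
  // Pauses here when browser DevTools are open (for example, in Karma's
  // Debug tab or on the Spec Runner page); otherwise this has no effect.
  debugger;
  return (a + b) / 2;
}

console.log(midpoint(2, 8)); // 5
```

The same statement works inside an `it` block; remove it once the investigation is done so it doesn't pause other developers' test runs.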

Testing is crucial to maintaining high-quality software, especially because so much of CesiumJS needs to be reliable, performant, and accurate across a wide variety of use cases. To decrease the barrier to entry for writing tests, we aim to make our testing setup as streamlined and accessible as possible. For a full list of testing options, see our CesiumJS Testing Guide on GitHub.

Looking forward, we plan to continue our effort to modernize CesiumJS with changes that may improve our testing workflow even further. For example, we are currently researching alternative build systems that may support features such as watch tasks to automatically run tests when the affected code changes locally. If you have any feedback or ideas about testing in CesiumJS please reach out on our community forum, where we’ll also post any big future announcements.