The Next Generation of 3D Tiles

Nearly two years ago, we announced the 3D Tiles initiative for streaming massive heterogeneous 3D geospatial datasets. It is amazing and humbling to see how much of it has come to fruition and how a vibrant community has formed around 3D Tiles.

With this initial success, we now have the foundation for modern 3D geospatial: Cesium as the canvas, 3D Tiles as the conduit, and the seemingly endless stream of geospatial data as the supply.

The data acquisition trends are clear: we are collecting more data, more frequently, at a higher resolution and lower cost than ever before, and the data is inherently 3D, driven by heterogeneous sources such as photogrammetry and LIDAR.

The challenge now is to realize the full value of this data. With your help, of course!

We are looking for input on the next generation of 3D Tiles: 3D Tiles Next.

Our vision includes:

- A truly 3D vector tile for heterogeneous classification

- Time-dynamic streaming

- A 3D analytics-enabled styling language

- Fast compression

- Implicit tiling schemes

- An even more engaged community and ecosystem

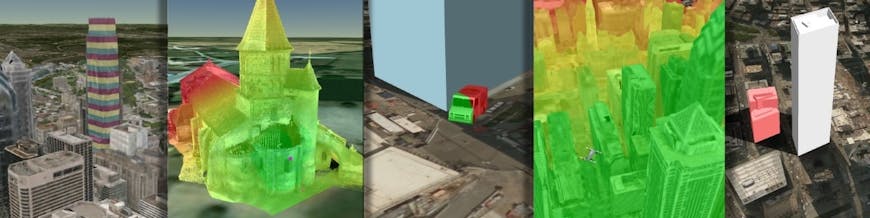

A truly 3D vector tile for heterogeneous classification

The vector tile in 3D Tiles will go beyond the traditional visualization of points, polylines, and polygons clamped to terrain. Vector tiles will clamp to any surface, from photogrammetry models to point clouds, at any orientation, whether they highlight the roads in a photogrammetry model, the floors of a 3D building, or the chairs in a CAD model.

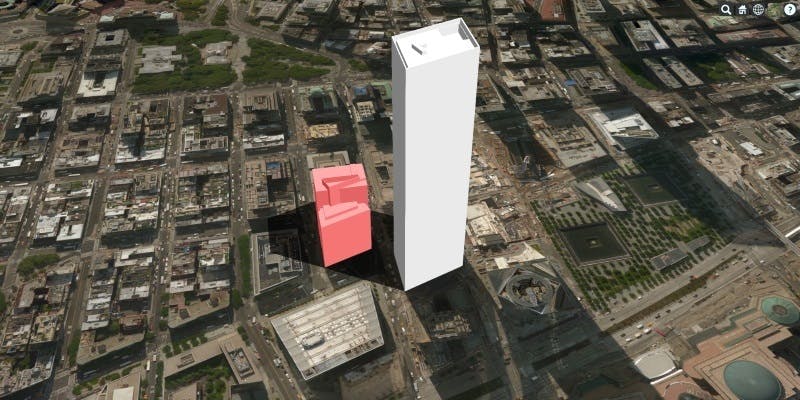

Business corridors vector tileset clamped to a city photogrammetry tileset.

Nominal vector tileset representing the floors of a building, showing the 3D nature of vector tiles.

In addition to supporting the 3D counterparts of points, polylines, and polygons, vector tiles will support 3D geometries such as boxes and cylinders and a general 3D mesh. The intersection of these 3D volumes with another 3D tileset, such as terrain or a photogrammetry model, defines the surface of the vector tile. This allows the vector tile to bring multiple non-uniform layers of shading and semantics to a 3D tileset.

3D Tiles declarative styling will be used to concisely describe how the vector tile shades the base 3D tileset.
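
To make the idea of volumes classifying a base tileset more concrete, here is a minimal sketch. It assumes axis-aligned boxes and a handful of sample points already taken from the base tileset's surface. The field names (`className`, `min`, `max`) and the functions `contains` and `classify` are hypothetical and are not part of any 3D Tiles specification; a real implementation would more likely run on the GPU against the rendered surface.

```javascript
// Hypothetical box volumes from a vector tile, each tagged with a class.
// The field names are illustrative only.
const volumes = [
  { className: 'floor-1', min: [0, 0, 0], max: [20, 10, 3] },
  { className: 'floor-2', min: [0, 0, 3], max: [20, 10, 6] },
];

// True when point p = [x, y, z] lies inside the box
// (min inclusive, max exclusive, so stacked boxes do not overlap).
function contains(volume, p) {
  return p.every((c, i) => c >= volume.min[i] && c < volume.max[i]);
}

// Returns the class of the first volume containing the point, or null.
function classify(point, volumeList) {
  const hit = volumeList.find((v) => contains(v, point));
  return hit ? hit.className : null;
}

// Sample points assumed to lie on the base tileset's surface.
const surfacePoints = [[5, 5, 1], [5, 5, 4.5], [30, 5, 1]];
const classes = surfacePoints.map((p) => classify(p, volumes));
console.log(classes);
```

Points that fall outside every volume keep the base tileset's own shading, which is how one vector tileset can layer semantics over only part of a model.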

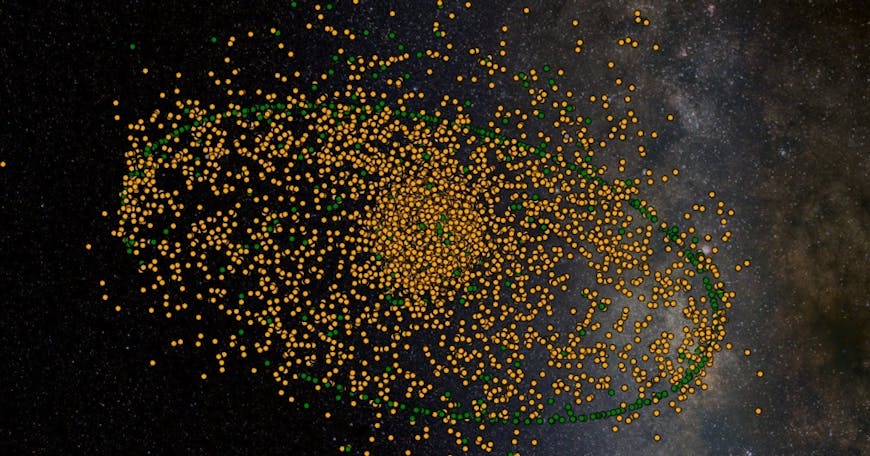

Time-dynamic streaming

With commodity drones, autonomous cars, and generally low-cost and easy data acquisition methods, the same area is often captured again and again to track anything from construction progress to coastal erosion, snow thickness, and mining.

Impact of coastal erosion in Nightcliff, Darwin by AEROmetrex.

The first challenge is to stream these temporal datasets so smoothly that we can drag a time slider to stream in new “frames” of the tileset. This is basically streaming video meets massive 3D geospatial datasets, except the video is interactive. It is one of the hardest open problems in the geospatial field. We are well positioned to tackle it given our current success with time-dynamic visualization.
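
One small piece of this problem is deciding which frame a given slider time refers to. The sketch below assumes each frame is identified only by a capture time and that the times are sorted. The names `frameTimes`, `frameIndexAt`, and `framesToRequest`, and the idea of prefetching the next frame, are assumptions made for illustration, not a description of how 3D Tiles Next will work.

```javascript
// Hypothetical frame index: the capture time (ms since epoch) at which
// each frame of the tileset becomes current. Sorted ascending.
const frameTimes = [
  Date.UTC(2017, 0, 1),
  Date.UTC(2017, 3, 1),
  Date.UTC(2017, 6, 1),
];

// Binary search for the last frame whose capture time is <= t.
// Returns -1 when t precedes the first frame.
function frameIndexAt(times, t) {
  let lo = 0;
  let hi = times.length - 1;
  let result = -1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (times[mid] <= t) {
      result = mid;
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  return result;
}

// Frames worth requesting for slider time t: the current frame plus the
// next one, so that dragging forward is less likely to stall.
function framesToRequest(times, t) {
  const current = frameIndexAt(times, t);
  const wanted = [];
  if (current >= 0) wanted.push(current);
  if (current + 1 < times.length) wanted.push(current + 1);
  return wanted;
}

console.log(framesToRequest(frameTimes, Date.UTC(2017, 4, 15)));
```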

Tracking 16,875 space objects in SpaceBook by AGI.

Once the foundation for time-dynamic 3D Tiles is laid, things will get more interesting: how do we show the difference between two frames of a time-dynamic tileset and how do we automatically derive semantic meaning from the differences?

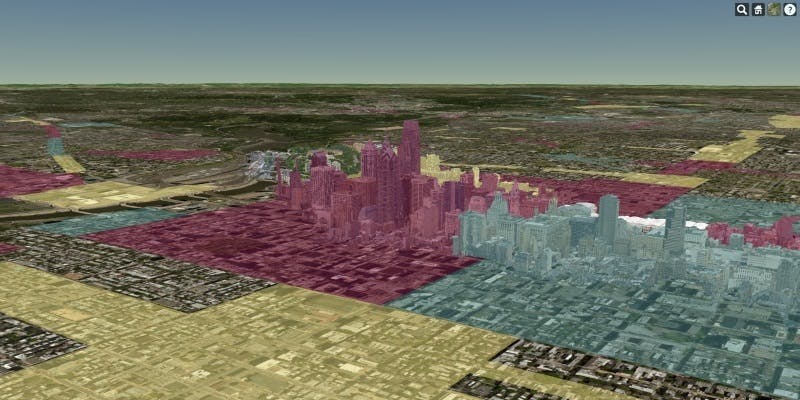

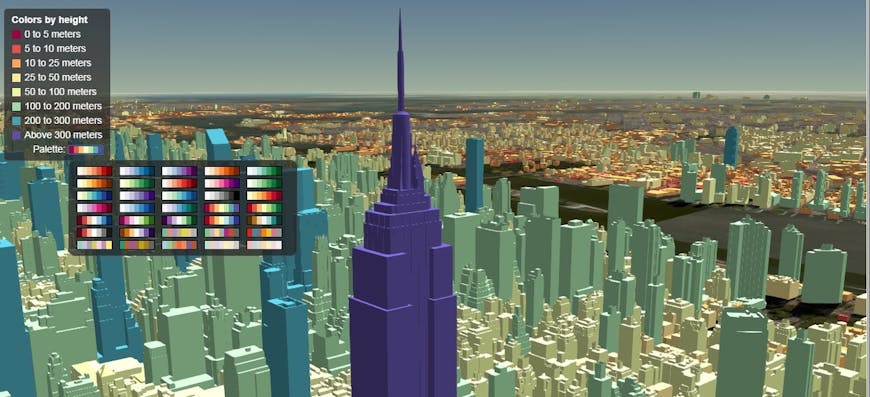

An analytics-enabled styling language

Today, 3D Tiles declarative styling is a concise language for stylizing features with expressions containing per-feature properties. This can be as simple as mapping a building’s height to a color ramp or as complex as determining the color of each point in a point cloud with a function taking into account the point’s intensity, GPS time, and classification, and determining the point’s size based on its distance to the viewer.

Height-based color ramp for 3D buildings.
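
As an illustration of such a height ramp, the following sketch generates a style in the `conditions` form of the styling language from a list of stops. The property name `Height`, the thresholds, and the helper `buildHeightRamp` are assumptions chosen for the example; the property name in particular depends on the dataset.

```javascript
// Ramp stops, highest threshold first. Style conditions are evaluated
// in order and the first one that matches wins.
const stops = [
  { min: 100, color: "color('purple')" },
  { min: 50, color: "color('red')" },
  { min: 10, color: "color('orange')" },
];

// Builds a style object using the "conditions" form.
// `property` is the per-feature property name (dataset-specific assumption).
function buildHeightRamp(property, rampStops, fallback) {
  const conditions = rampStops.map((s) => [
    '${' + property + '} >= ' + s.min,
    s.color,
  ]);
  // Catch-all for every feature below the last stop.
  conditions.push(['true', fallback]);
  return { color: { conditions } };
}

const style = buildHeightRamp('Height', stops, "color('white')");
console.log(JSON.stringify(style, null, 2));
```

Generating the conditions from data keeps the thresholds in one place, which helps when the same ramp has to be retuned for a different city.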

The next step is to bring 3D analytics to declarative styling so we can take into account the 3D geospatial context of each feature. For example, to support shadow studies, is a feature shadowed by other features and the rest of the 3D scene at a given time?

    {
        "color" : "inShadow(${simulation_time}) ? color('red') : color('white')"
    }

The building in shadow is shaded red.

When the sun position changes, the building is no longer in shadow.
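
`inShadow` is a proposed function, not something the styling language offers today. As a rough sketch of the kind of test it might perform, the code below casts a ray from a point toward the sun and checks it against axis-aligned boxes standing in for the rest of the scene. Every name here is hypothetical, and deriving the sun direction from the simulation time is left out.

```javascript
// Slab test: does the ray origin + t * dir (t >= 0) hit the box?
function rayHitsBox(origin, dir, box) {
  let tMin = 0;
  let tMax = Infinity;
  for (let i = 0; i < 3; i++) {
    if (dir[i] === 0) {
      // Ray is parallel to this slab: miss unless the origin lies within it.
      if (origin[i] < box.min[i] || origin[i] > box.max[i]) return false;
      continue;
    }
    const t1 = (box.min[i] - origin[i]) / dir[i];
    const t2 = (box.max[i] - origin[i]) / dir[i];
    tMin = Math.max(tMin, Math.min(t1, t2));
    tMax = Math.min(tMax, Math.max(t1, t2));
    if (tMin > tMax) return false;
  }
  return true;
}

// A point is in shadow when any occluder blocks the ray toward the sun.
function inShadow(point, sunDirection, occluders) {
  return occluders.some((box) => rayHitsBox(point, sunDirection, box));
}

// Illustrative scene: a tower to the east of a low rooftop point.
const tower = { min: [10, -5, 0], max: [20, 5, 50] };
const roofPoint = [0, 0, 10];
const morningSun = [1, 0, 0.5]; // low sun to the east, behind the tower
const noonSun = [0, 0, 1]; // sun directly overhead

console.log(inShadow(roofPoint, morningSun, [tower]));
console.log(inShadow(roofPoint, noonSun, [tower]));
```

With these values the rooftop point is shadowed in the morning and lit at noon, mirroring the two screenshots described above.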

Visibility analysis will be just as concise.

    {
        "color" : "isVisibleFrom(${anotherFeature.location}) ? color('green') : color('red')"
    }

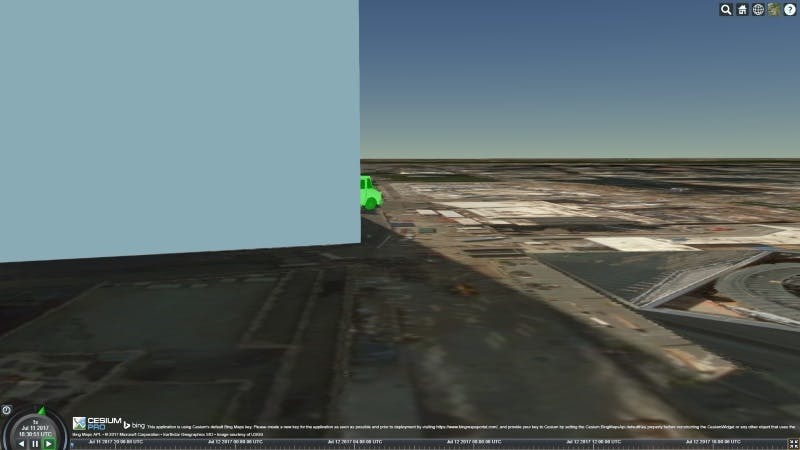

The visible part of the truck is shaded green.

The part of the truck that is not visible from the above perspective is shaded red.

Styling by distance to another feature follows the same pattern.

    {
        "color" : "ramp(palette, distanceTo(${anotherFeature.location}))"
    }
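
To show what an expression like `ramp(palette, distanceTo(...))` could evaluate to, here is a minimal sketch of a distance-driven color ramp. Both `ramp` and `distanceTo` are proposed functions, so the signatures below are assumptions. In particular, the third `maxDistance` argument is added here only so the example is self-contained, and the function is called `distance` to make clear it is a stand-in.

```javascript
// Euclidean distance between two [x, y, z] positions.
function distance(a, b) {
  return Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]);
}

// Linearly interpolates a palette of evenly spaced RGB stops.
// `maxDistance` maps to the last stop; larger distances are clamped.
function ramp(palette, d, maxDistance) {
  const t = Math.min(Math.max(d / maxDistance, 0), 1) * (palette.length - 1);
  const i = Math.min(Math.floor(t), palette.length - 2);
  const f = t - i;
  return palette[i].map((c, k) => Math.round(c + f * (palette[i + 1][k] - c)));
}

const palette = [[0, 255, 0], [255, 255, 0], [255, 0, 0]]; // green, yellow, red
const target = [0, 0, 0];

// A feature halfway to maxDistance and one well beyond it.
const halfway = ramp(palette, distance([30, 40, 0], target), 100);
const far = ramp(palette, distance([0, 0, 250], target), 100);
console.log(halfway, far);
```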

Fast compression

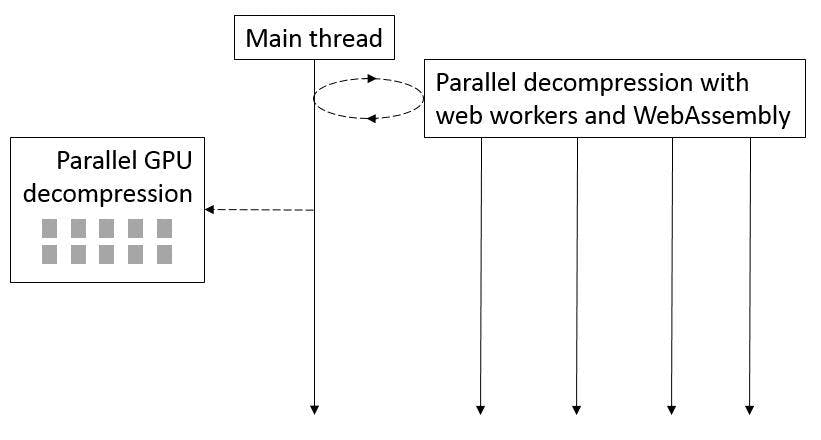

To meet the demands of ever-increasing resolution and time-dynamic tilesets, we need more than the current GPU decompression with quantization and oct-encoding. We plan to adopt the latest mesh and texture compression designed for streaming graphics applications with fast decode requirements.

Parallel decompression with the GPU and web workers.
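
For readers unfamiliar with the quantization and oct-encoding mentioned above, the sketch below shows the general technique: positions are stored as integers within a known range, and unit normals are projected onto an octahedron and stored as two bytes. This is an illustration of the well-known approach, not the exact code used by Cesium; the function names and the 8-bit and 16-bit sizes are choices made for the example.

```javascript
function signNotZero(v) {
  return v < 0 ? -1 : 1;
}

// Encodes a unit-length normal [x, y, z] into two bytes.
function octEncode(n) {
  const l1 = Math.abs(n[0]) + Math.abs(n[1]) + Math.abs(n[2]);
  let u = n[0] / l1;
  let v = n[1] / l1;
  if (n[2] < 0) {
    // Fold the lower hemisphere over the diagonals of the square.
    const fu = (1 - Math.abs(v)) * signNotZero(u);
    const fv = (1 - Math.abs(u)) * signNotZero(v);
    u = fu;
    v = fv;
  }
  // Map [-1, 1] to [0, 255].
  const toByte = (c) => Math.round((c * 0.5 + 0.5) * 255);
  return [toByte(u), toByte(v)];
}

// Decodes two bytes back into an approximate unit normal.
function octDecode(e) {
  let u = (e[0] / 255) * 2 - 1;
  let v = (e[1] / 255) * 2 - 1;
  const z = 1 - Math.abs(u) - Math.abs(v);
  if (z < 0) {
    // Undo the fold applied to the lower hemisphere.
    const fu = (1 - Math.abs(v)) * signNotZero(u);
    const fv = (1 - Math.abs(u)) * signNotZero(v);
    u = fu;
    v = fv;
  }
  const len = Math.hypot(u, v, z);
  return [u / len, v / len, z / len];
}

// Stores a value in [min, max] as an unsigned integer with `bits` bits.
function quantize(value, min, max, bits) {
  const steps = 2 ** bits - 1;
  return Math.round(((value - min) / (max - min)) * steps);
}

function dequantize(q, min, max, bits) {
  const steps = 2 ** bits - 1;
  return min + (q / steps) * (max - min);
}

const normal = [0.6, 0, -0.8];
const restored = octDecode(octEncode(normal));
console.log(octEncode(normal), restored);
```

Decoding is a handful of arithmetic operations per vertex, which is why it fits comfortably in a vertex shader. The trade-off is a small, bounded loss of precision.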

Through Khronos and glTF, we are collaborating with the Google Draco team on open mesh and point cloud compression. Draco has open-source encoders and decoders. For meshes, it achieves a compression ratio of 10-25x vs. gzip, with 110 MB decoded in 1 second in JavaScript. For point clouds, it achieves a compression ratio of 3.6x vs. gzip, with 16.7M points decoded in 2.2 seconds in JavaScript. To top it off, Draco is constantly improving.

Implicit tiling schemes

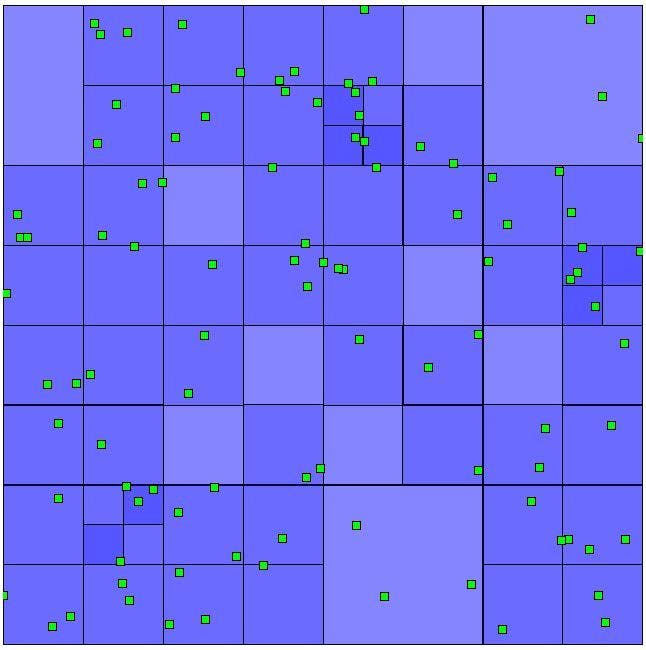

To facilitate optimal subdivisions for diverse datasets, 3D Tiles has a flexible tiling scheme. To better support dynamically generated tilesets, uniform global-scale datasets, and time-dynamic datasets, 3D Tiles Next will have concise support for well-known and widely used spatial data structures such as octrees and quadtrees, avoiding the need to explicitly define the relationship between parent and child tiles.

A traditional quadtree.
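
The appeal of an implicit scheme is that a tile's children, parent, and bounds all follow from its coordinates, so none of them need to be written into the tileset. A minimal quadtree sketch, assuming tiles are addressed by `(level, x, y)` and the root covers a known extent, might look like this. The function names and the URL layout are hypothetical.

```javascript
// A tile in an implicit quadtree is identified by (level, x, y) alone.
function children(tile) {
  const { level, x, y } = tile;
  return [
    { level: level + 1, x: 2 * x, y: 2 * y },
    { level: level + 1, x: 2 * x + 1, y: 2 * y },
    { level: level + 1, x: 2 * x, y: 2 * y + 1 },
    { level: level + 1, x: 2 * x + 1, y: 2 * y + 1 },
  ];
}

// The parent is found by halving the coordinates; the root has none.
function parent(tile) {
  if (tile.level === 0) return null;
  return { level: tile.level - 1, x: tile.x >> 1, y: tile.y >> 1 };
}

// Bounds of a tile, derived from the root extent by repeated halving.
function bounds(tile, root) {
  const n = 2 ** tile.level; // tiles per axis at this level
  const w = (root.east - root.west) / n;
  const h = (root.north - root.south) / n;
  return {
    west: root.west + tile.x * w,
    east: root.west + (tile.x + 1) * w,
    south: root.south + tile.y * h,
    north: root.south + (tile.y + 1) * h,
  };
}

// Hypothetical URL template; the path layout is an illustration only.
function tileUrl(tile) {
  return `tiles/${tile.level}/${tile.x}/${tile.y}.b3dm`;
}

const globe = { west: -180, east: 180, south: -90, north: 90 };
const tile = { level: 1, x: 1, y: 0 };
console.log(bounds(tile, globe), tileUrl(tile), children(tile));
```

An octree works the same way with a third coordinate and eight children per tile.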

An even more engaged community and ecosystem

We were energized to see such early adoption of 3D Tiles well before the spec or Cesium implementation took shape. Now that the implementation is officially shipping in Cesium 1.35, the final steps of the OGC community process are approaching, and solid progress is being made on the 3D Tiles validator, we expect to see even broader adoption.

We are building the commercial Cesium Composer platform to provide 3D tiling for a diverse array of datasets such as photogrammetry models, CAD, 3D buildings, and point clouds. We expect to see many 3D Tiles projects—from exporters to converters to rendering engines—continue to emerge from the Cesium team and the entire community.

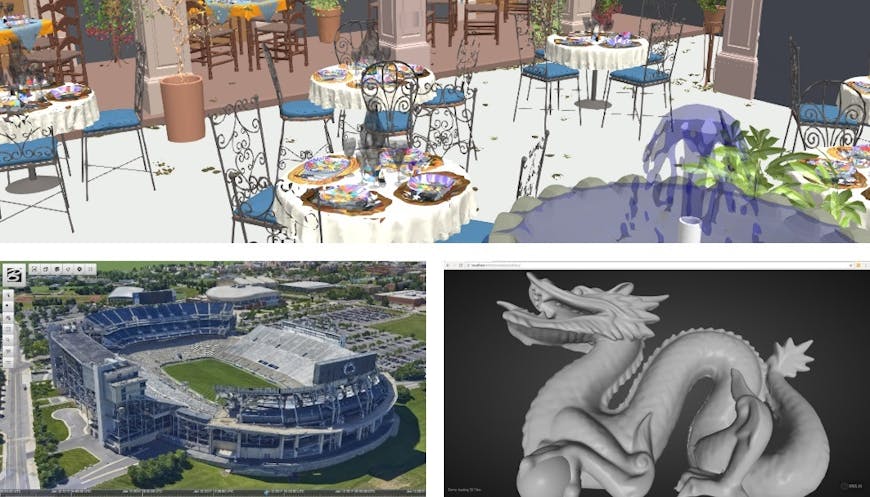

Top left: Indoor CAD scene tiled with Cesium Composer.

Bottom left: Photogrammetry model exported from Bentley ContextCapture. Bottom right: 3D tileset rendered in OSG.JS.

We want to hear what you are building with 3D Tiles and what you want to see in 3D Tiles Next.