Cesium ion’s CI/CD Pipeline

Cesium ion SaaS handles a wide range of functions and serves a global user base, requiring rapid updates and bug fixes. To deliver at this pace, Cesium minimizes the time developers spend away from feature development. An automated testing and deployment pipeline is key: It allows developers to focus on adding value for users while automation handles the operational aspects of deployment.

Although setting up an automated CI/CD (continuous integration/continuous deployment) pipeline involves a large up-front investment in engineering resources, the long-term benefits are substantial. The efficiency gained through reduced development friction and accelerated deployment far outweighs the initial cost. In this blog post, we share Cesium ion’s automated CI/CD pipeline, which closely follows AWS’s recommended practices and enables several deployments a day. This enhances our ability to deliver consistent value to our users.

The pipeline

The first step is to run automated tests, which include unit and end-to-end tests, to ensure the new code is up to our engineering standards. Our CI infrastructure, powered by GitHub Actions, runs these tests for every commit on all repositories that are part of ion. Beyond testing, GitHub Actions also handles code containerization, linting, and automated security scanning. The extensive coverage of these automated tasks enables us to ensure each commit is a deployment-ready update to Cesium ion.

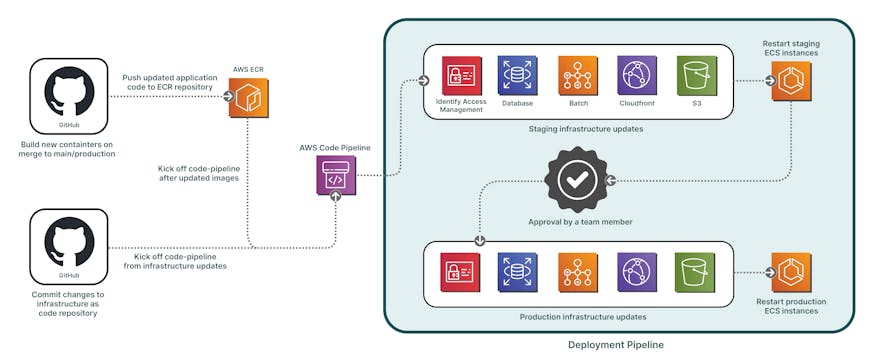

Once the above CI checks pass on a pull request, and the code has been through a pull request review process, the next step is to execute our CD pipelines. Cesium ion operates three deployment environments: development, staging, and production. We manage these environments with two distinct CD pipelines. We use a branching strategy for our repositories in which the main branch always contains the latest changes, while the production branch represents what has been deployed.

The first pipeline manages the development environment. Changes are automatically deployed with every merge to the main branch, providing us with an always up-to-date environment to test the latest functionality. Because the CI directly kicks off the development environment pipeline, it is easy to point that pipeline at a feature branch and deploy it for testing in a realistic environment before merging the code to main.

The second pipeline manages deployments to staging and production. It is triggered automatically any time a commit is made to the production branch in any one of our repositories. See the graphic below for details about how these pipelines deploy:

Deployment pipeline for infrastructure and application code for Cesium ion SaaS.
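
The branch-to-pipeline rules described above can be sketched in a few lines of Python. This is only an illustration of the routing logic, not our actual configuration: the pipeline names, the `Deployment` type, and the `manual` flag (standing in for pointing the development pipeline at a feature branch) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Deployment:
    pipeline: str                    # hypothetical pipeline name
    environments: Tuple[str, ...]    # environments updated, in order
    review_before_production: bool   # review step between staging and production


def plan_deployment(branch: str, manual: bool = False) -> Optional[Deployment]:
    """Decide which pipeline, if any, a change on `branch` should trigger."""
    if branch == "production":
        # Commits to production roll out to staging first, then production.
        return Deployment("staging-production", ("staging", "production"), True)
    if branch == "main" or manual:
        # Merges to main deploy automatically; feature branches only on request.
        return Deployment("development", ("development",), False)
    # Any other branch gets CI checks but no deployment.
    return None


print(plan_deployment("main"))
print(plan_deployment("feature/new-tiler", manual=True))
print(plan_deployment("production"))
```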

Continuous deployment

The containerized images from the above CI steps are pushed to AWS Elastic Container Registry (ECR) for deployment. In the development environment, these images are directly pushed to the development AWS account. For staging and production environments, the images are pushed to a “SharedServices” AWS account, which manages cross-account permissions and deployment processes.
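
As a rough illustration of how the push target depends on the environment, the sketch below builds an ECR image URI. The account IDs, default region, and repository name are placeholders, not real values; only the general ECR URI layout (`<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`) is standard.

```python
# Placeholder account IDs for illustration only.
ACCOUNTS = {
    "development": "111111111111",
    "shared-services": "222222222222",
}


def registry_account(environment: str) -> str:
    """Return the name of the account whose registry receives the image."""
    if environment == "development":
        return "development"
    if environment in ("staging", "production"):
        # Staging and production both pull from the shared account.
        return "shared-services"
    raise ValueError(f"unknown environment: {environment}")


def image_uri(environment: str, repository: str, tag: str,
              region: str = "us-east-1") -> str:
    """Build the ECR image URI for the given environment."""
    account_id = ACCOUNTS[registry_account(environment)]
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


print(image_uri("development", "example-service", "abc123"))
print(image_uri("production", "example-service", "abc123"))
```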

All of the infrastructure within our AWS accounts is defined in AWS CloudFormation templates (Infrastructure as Code). The CD pipeline also updates various AWS components, including policies, alarms, and notification topics for services like RDS PostgreSQL, Elastic Container Service (ECS) clusters, Route 53, AWS WAF, CloudFront, and AWS Batch. The CloudFormation templates are deployed to the respective AWS account through the CI in the infrastructure repository. Updates to the container images in ECR or to the CloudFormation templates trigger an AWS CodePipeline execution. The pipeline uses the new images to update the AWS ECS cluster and the CloudFormation templates to update policies and infrastructure settings. The pipeline first applies necessary infrastructure updates before deploying the updated images to AWS ECS. We have automated rollbacks and alerts configured to address any issues if a new container instance fails to start.
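
The ordering and failure handling described above can be summarized in a small sketch. The four callables are hypothetical stand-ins for the real pipeline stages (CloudFormation update, ECS deployment, rollback, and alerting), and using `RuntimeError` to signal a container that fails to start is an assumption made for the example.

```python
from typing import Callable


def run_pipeline(
    apply_infrastructure: Callable[[], None],
    deploy_images: Callable[[], None],
    rollback_images: Callable[[], None],
    alert: Callable[[str], None],
) -> bool:
    """Apply infrastructure first, then images; roll back and alert on failure."""
    # Infrastructure changes land before the new containers that depend on them.
    apply_infrastructure()
    try:
        deploy_images()
    except RuntimeError as error:
        # Restore the previous images and notify the team.
        rollback_images()
        alert(f"image deployment failed: {error}")
        return False
    return True


def failing_deploy() -> None:
    raise RuntimeError("container failed to start")


events = []
succeeded = run_pipeline(
    lambda: events.append("infrastructure"),
    failing_deploy,
    lambda: events.append("rollback"),
    lambda message: events.append("alert"),
)
print(succeeded, events)
```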

The staging and production pipeline, managed by “SharedServices,” first pushes all updates out to staging, and then, as a second step, performs the updates in production. For quality control, we have included a review step between the staging and production deployments, though our setup allows for automated production deployment if necessary.

In addition to managing container images and infrastructure updates, our CD pipeline performs several other tasks. For our front end, which consists of statically hosted files, the pipeline handles compilation and transpilation during CI, with the files then pushed directly to the respective accounts. For our AWS Lambda and Lambda@Edge functions, the pipeline generates a hash based on the function code and compares it with the existing production version. If the hashes differ, the pipeline pushes the updated Lambda function. The primary reason for deploying only when we detect code changes is to speed up overall deploy time.
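
The hash comparison for Lambda functions can be sketched as follows. The exact hashing scheme is not specified here, so this example assumes a base64-encoded SHA-256 digest of the deployment package, which is the format of the `CodeSha256` value that the Lambda API reports for a deployed function. The function names are hypothetical.

```python
import base64
import hashlib


def code_sha256(package_bytes: bytes) -> str:
    """Base64-encoded SHA-256 digest of a deployment package."""
    digest = hashlib.sha256(package_bytes).digest()
    return base64.b64encode(digest).decode("ascii")


def needs_deploy(package_bytes: bytes, deployed_hash: str) -> bool:
    """Return True only when the new package differs from the deployed one."""
    return code_sha256(package_bytes) != deployed_hash


# Stand-in bytes for the currently deployed package and two candidate builds.
deployed = code_sha256(b"handler code v1")
print(needs_deploy(b"handler code v1", deployed))
print(needs_deploy(b"handler code v2", deployed))
```

One caveat with this approach: the comparison is only meaningful if the build is reproducible, since archive metadata such as file timestamps can change the hash even when the source code has not changed.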

Conclusion

With our streamlined process, deploying changes to Cesium ion SaaS is straightforward. Developers simply merge changes to the main and production branches of the respective repositories, click a button, and the feature is available to millions of users worldwide.

Experience features from the advanced pipeline by signing up or signing in to your Cesium ion account.