The State of Cesium ion Architecture: The Infrastructure behind Cesium ion Self-Hosted

Cesium ion Self-Hosted is our solution for geospatial workloads that require isolated environments detached from the public internet, or that require an application hosted in the cloud behind a user’s own identity provider. It is a Kubernetes-based application that provides feature parity with Cesium ion SaaS by leveraging the same code base. The next evolutionary step in our product line, Cesium ion Self-Hosted caters to both small and large enterprises, giving them greater control over Cesium’s offerings. In this blog post, we provide an overview of the architecture behind the Kubernetes application.

Why Kubernetes and Helm?

As stated in our blog post describing the architecture of Cesium ion SaaS, Cesium ion is an amalgamation of small pieces that each perform a distinct function. Each piece is containerized individually for consistency between development and production. In the AWS deployment of our SaaS product, we use Amazon ECS to orchestrate spinning up, tearing down, and otherwise managing the containers. For our self-hosted implementation, we keep the same architecture, but we can’t rely on Amazon services. We also need to integrate Cesium ion Self-Hosted into our users’ diverse environments, cloud providers, and infrastructure.

Kubernetes is a widely used open-source container orchestrator that allows for declarative configuration of resources. A container orchestrator in this context is an automated system that spins up, tears down, and regularly checks resources based on the configuration provided. For our use case, Kubernetes acts as a drop-in replacement for Amazon ECS and was the obvious choice for the self-hosted product. Kubernetes scales across our users’ existing operations and provides them with a platform-agnostic solution.

Since many of our users already have sophisticated Kubernetes clusters set up, they would find it difficult to integrate pre-defined Kubernetes objects from Cesium. We needed a way for users to specify their custom requirements in a configuration file and have our application change its behavior based on those requirements. We decided to leverage Helm, a package manager for Kubernetes, which allows a user’s desired configuration to be supplied to our application through a top-level configuration file. This also makes bringing up the entire Self-Hosted application straightforward, since installation requires only one command. Updates are similarly streamlined: Helm handles tearing down old deployments and spinning up new ones with a single command.
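To make the idea of a top-level configuration file concrete, here is a minimal Python sketch of how user-supplied values can be overlaid on a chart's defaults. It is a simplified model of the merge Helm performs (nested mappings merge key by key, other values are replaced), not Helm's implementation, and every key name below is hypothetical rather than taken from the Cesium ion Self-Hosted chart.

```python
from copy import deepcopy


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Overlay user overrides on chart defaults.

    Nested mappings are merged key by key; any other value (lists included)
    in the overrides replaces the default outright.
    """
    merged = deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical chart defaults; these keys are illustrative only.
chart_defaults = {
    "assetServer": {"replicas": 1, "cacheSizeMb": 256},
    "ingress": {"enabled": True, "tls": False},
}

# Hypothetical user values file, already parsed into a dict.
user_values = {
    "assetServer": {"replicas": 3},
    "ingress": {"tls": True},
}

effective = merge_values(chart_defaults, user_values)
```

The user only states what differs from the defaults; everything else, such as the assumed `cacheSizeMb` setting here, carries over unchanged.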

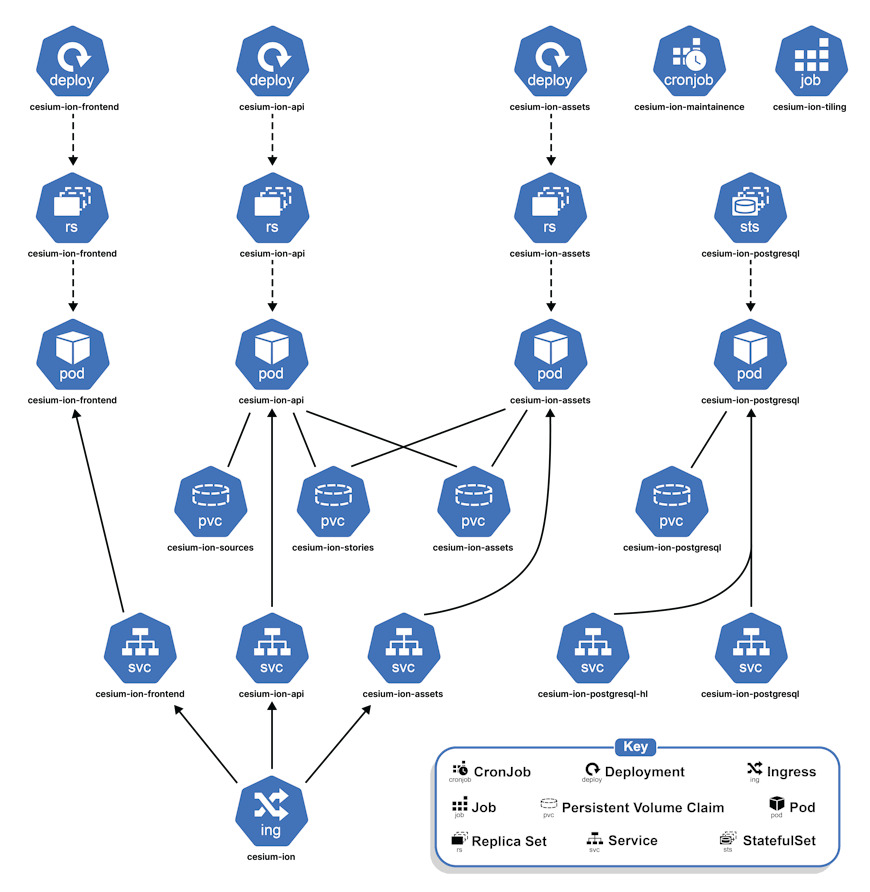

This diagram illustrates a high-level view of the architecture that gets deployed in a Kubernetes cluster.

Differences between the architectures of Cesium ion SaaS and Self-Hosted

SaaS and Self-Hosted provide similar functionality, but their deployment environments differ enough to require different implementations. For instance, in our SaaS architecture blog we detailed how AWS S3 was perfect for our use case of storing a large number of small files. For a Self-Hosted product operating in an offline environment, however, storing everything on a system like S3 is not possible, and services that imitate S3 can come with substantial financial cost. Below are some examples of differences between the two environments and the solutions we came up with for each deployment mode:

Asset hosting

In a Self-Hosted, Kubernetes-based environment, we need to provide a way for users to write data locally to the volumes mounted to the pods in the cluster. Since tiled assets can get extremely large, saving them as a “pile of files” on a file system can become challenging to manage. Instead, we store tiled assets in SQLite databases, which simplifies both tiling and management of the outputs.
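As a rough illustration of trading a “pile of files” for a single database file, the following sketch stores tiles as BLOBs keyed by level, x, and y using Python's built-in `sqlite3` module. The table name, column names, and keying scheme are assumptions made for this example; the actual schema used by Cesium ion Self-Hosted is not described here.

```python
import sqlite3
from typing import Optional


def create_tile_store(path: str) -> sqlite3.Connection:
    """Open (or create) a tile database with one row per tile."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS tiles (
            level INTEGER NOT NULL,
            x     INTEGER NOT NULL,
            y     INTEGER NOT NULL,
            data  BLOB    NOT NULL,
            PRIMARY KEY (level, x, y)
        )
        """
    )
    return conn


def put_tile(conn: sqlite3.Connection, level: int, x: int, y: int, data: bytes) -> None:
    """Insert or overwrite a single tile; the with-block commits the write."""
    with conn:
        conn.execute(
            "INSERT OR REPLACE INTO tiles (level, x, y, data) VALUES (?, ?, ?, ?)",
            (level, x, y, data),
        )


def get_tile(conn: sqlite3.Connection, level: int, x: int, y: int) -> Optional[bytes]:
    """Return the tile payload, or None when the tile does not exist."""
    row = conn.execute(
        "SELECT data FROM tiles WHERE level = ? AND x = ? AND y = ?",
        (level, x, y),
    ).fetchone()
    return None if row is None else row[0]


# An in-memory database keeps the example self-contained; a real deployment
# would point at a file on the mounted volume instead.
store = create_tile_store(":memory:")
put_tile(store, 0, 0, 0, b"root-tile-bytes")
put_tile(store, 1, 1, 0, b"child-tile-bytes")
```

However many tiles are written, the volume holds one file per asset, which is what makes copying, backing up, and shipping the output manageable.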

Because these assets are stored in SQLite databases, we have developed a custom asset server, written in Node.js with Restify, that handles serving them. It also manages authentication and authorization using temporary JWT credentials supplied by the API server. Self-Hosted customers can expose the asset server through a CDN of their choice for edge caching, providing a setup similar to the one we have on SaaS.
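The temporary-credential flow can be sketched as follows: one service signs a short-lived token scoped to an asset, and another verifies the signature, expiry, and scope before serving. This is a minimal standard-library illustration of an HS256-signed JWT, not the asset server's code (which is written in Node.js); the shared-secret scheme, the `assetId` claim, and both function names are assumptions.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(secret: bytes, asset_id: int, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Sign a token granting access to one asset (hypothetical claim names)."""
    issued_at = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"assetId": asset_id, "exp": issued_at + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_token(secret: bytes, token: str, asset_id: int,
                 now: Optional[float] = None) -> bool:
    """Accept only well-formed, correctly signed, unexpired tokens for this asset."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(claims_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError:
        return False  # wrong segment count, bad base64, or bad JSON
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return False
    if header.get("alg") != "HS256":
        return False
    signing_input = (header_b64 + "." + claims_b64).encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return False
    expires_at = claims.get("exp")
    if not isinstance(expires_at, (int, float)):
        return False
    current = time.time() if now is None else now
    return claims.get("assetId") == asset_id and current < expires_at
```

Because the token carries its own expiry and scope, the verifying side needs only the shared secret and no database lookup per request, which keeps the serving path cheap.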

The asset server also serves static assets such as CZML, KML, GeoJSON, and glTF, as well as images required by Cesium Stories. The asset server is containerized and defined as a Kubernetes Deployment object. It is designed to maximize performance by using all available CPU cores within its pod and by scaling instances dynamically based on resource utilization. It caches individual elements stored in its asset databases using an LRU (Least Recently Used) strategy.
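For readers unfamiliar with LRU caching, here is a small Python sketch of the strategy: recently used entries stay in memory, and the entry that has gone unused the longest is evicted when the cache is full. The class, its `loader` callback, and the capacity are illustrative assumptions; the asset server's actual cache is not shown in this post.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class LruCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int, loader: Callable[[Hashable], bytes]) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._loader = loader  # called on a miss, e.g. a database read
        self._entries: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> bytes:
        if key in self._entries:
            # Mark the entry as most recently used.
            self._entries.move_to_end(key)
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        value = self._loader(key)
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            # The first item is the least recently used one.
            self._entries.popitem(last=False)
        return value


# Record each simulated database read so the eviction order is visible.
loads = []


def load_from_database(key: Hashable) -> bytes:
    loads.append(key)
    return f"payload-for-{key}".encode("utf-8")


cache = LruCache(capacity=2, loader=load_from_database)
for tile_key in ["a", "b", "a", "c", "b"]:
    cache.get(tile_key)
```

In this run, the second request for `"a"` is served from memory, while `"b"` has to be loaded twice because requesting `"c"` evicted it as the least recently used entry.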

API server

For our Self-Hosted offering, the API server leverages the same code as SaaS and performs an additional function: it accepts source asset files through an HTTP multi-part upload, which replaces the uploads made directly to AWS S3 in SaaS. The API server container runs within a Kubernetes Deployment that scales the API server’s pods based on resource usage. These pods are exposed through individual ClusterIP services behind a Kubernetes ingress, an API object that exposes routes from outside the cluster to services within the cluster. TLS (Transport Layer Security) can be configured with this setup to provide secure HTTPS deployments.

The API server is backed by a SQL database that stores asset and user information. Since the API server works with any SQL-based database, users retain the flexibility to integrate their preferred SQL database provider.

Tiling

In Cesium ion Self-Hosted, we need a way to launch jobs for heterogeneous source data, each of which may require a different hardware configuration. This requirement is the same in SaaS, where we use AWS Batch. In Self-Hosted we use Kubernetes Jobs, which act as an almost drop-in replacement for AWS Batch by providing a similar level of functionality. Kubernetes Jobs allow us to run jobs in isolated containers while placing new jobs in a queue until resources become available.
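As a sketch of how per-job hardware requirements can be expressed, the function below builds a Kubernetes Job manifest as a plain Python dictionary, with CPU and memory requests chosen per source type. The manifest fields (`batch/v1`, `restartPolicy`, `resources.requests`) are standard Kubernetes, but the profile table, image name, container name, and paths are hypothetical and not taken from Cesium ion Self-Hosted.

```python
# Hypothetical resource profiles per source type; the numbers are made up.
RESOURCE_PROFILES = {
    "imagery": {"cpu": "2", "memory": "4Gi"},
    "terrain": {"cpu": "4", "memory": "8Gi"},
    "photogrammetry": {"cpu": "8", "memory": "32Gi"},
}


def build_tiling_job(job_name: str, image: str, source_type: str, source_path: str) -> dict:
    """Return a batch/v1 Job manifest sized for the given source type."""
    requests = RESOURCE_PROFILES[source_type]
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": job_name},
        "spec": {
            "backoffLimit": 0,  # do not retry a failed tiling run automatically
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "tiler",  # hypothetical container name
                            "image": image,
                            "args": [source_type, source_path],
                            "resources": {"requests": dict(requests)},
                        }
                    ],
                }
            },
        },
    }


job = build_tiling_job(
    job_name="tile-asset-42",
    image="example.invalid/tiler:latest",  # placeholder image reference
    source_type="terrain",
    source_path="/data/sources/42",
)
```

The queueing behavior follows from the resource requests: when no node can satisfy them, the Job's pod stays in the Pending state until capacity frees up, and the scheduler then places it.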

Since the API server handles source file uploads, the volume it writes to is also mounted to the tiling container, giving the tiling pipeline direct access to the source data. The tiling pipeline then processes this data and outputs SQLite files for imagery, terrain, and 3D Tiles workloads. We chose databases so that assets remain easy to manage, rather than placing millions of tiny files on a volume. This also makes it easy to ship over 470 million tiles in a single file to our customers as part of our global data offering.

Authentication

Cesium ion Self-Hosted defaults to single-user mode, which runs without a built-in authentication system and assumes all site visitors are the same user. To address authentication needs, Self-Hosted users have the option to integrate with their SAML providers, affording administrators control over user access within their organizations. This approach not only ensures ease of integration with enterprise authentication software but also enhances security compared to custom authentication implementations.

The API server, asset server, and front-end SPA are all defined as Kubernetes Deployments, allowing for horizontal scaling to accommodate high request volume. Each of these pods is exposed through a service sitting behind a Kubernetes ingress, enabling external user access. The tiling pipeline, on the other hand, runs in individual pods executed through Kubernetes Jobs. Shared volumes for both asset sources and tiled assets are mounted onto the API server and tiling pipeline. This approach simplifies the tiling process by eliminating the need to copy and move assets between volumes. Similarly, the tiled assets volume is also mounted onto the asset server to prevent duplication and optimize asset serving.

Lastly, Cesium ion Self-Hosted includes a PostgreSQL Helm chart provided by Bitnami, allowing users to swiftly set up a database instance, further enhancing deployment ease and efficiency. This instance can be replaced by a user-managed PostgreSQL instance.

Long before we released Cesium ion Self-Hosted, we'd been crafting it to suit customer use cases that ion SaaS can’t support. We continue to add new features as part of our roadmap to empower users with greater control over their workflows. Reach out to us to learn more about incorporating Cesium ion Self-Hosted into your environment.